How many processors (or other resources) should I request per job?

How many resources you request per job is entirely up to you. You need to decide how many resources make sense in your situation, and a great deal depends on the software you're using, how many individual cases you need to run, etc.

General Principles

Nature of the software

First and foremost, know what your software is capable of taking advantage of. If your software is only written to use a single processor, then only request a single processor. If your software can take advantage of more than one processor on a single node, but can't go beyond one node, then only request up to one node. If your software can utilize resources on multiple nodes, then feel free to request multiple nodes. If you request more resources than your software can use, then the system will still reserve them for you, meaning that nobody else will get them until your job is done, but they won't actually help your job run any faster.
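If you write your own Python code, one way to make sure it actually uses the processors you request, and no more, is to size its worker pool from the processors the job may run on rather than from every core on the node. The sketch below uses Python's standard `os` and `multiprocessing` modules. `os.sched_getaffinity` exists only on some platforms such as Linux, so the sketch falls back to `os.cpu_count()`. `run_case` is a hypothetical stand-in for one of your cases.

```python
import os
from multiprocessing import Pool


def usable_processors() -> int:
    """Return how many processors this process is allowed to run on."""
    # sched_getaffinity reports the CPU-affinity set, which some batch
    # schedulers restrict to a job's allocation; Linux-style platforms only.
    if hasattr(os, "sched_getaffinity"):
        return len(os.sched_getaffinity(0))
    # Fallback: all logical CPUs on the machine, which may exceed the allocation.
    return os.cpu_count() or 1


def run_case(case_id: int) -> int:
    # Hypothetical placeholder for the work done on one independent case.
    return case_id * case_id


if __name__ == "__main__":
    workers = usable_processors()
    # One worker process per usable processor, so none sit idle and none are oversubscribed.
    with Pool(processes=workers) as pool:
        results = pool.map(run_case, range(8))
    print(f"ran {len(results)} cases on {workers} worker processes")
```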

Scalability - Linear, sublinear, or superlinear

Scalability describes how a particular piece of software responds to an increase in resources, usually the processor count. Based on this, we group software into three categories: linear, sublinear, or superlinear. Most software is sublinear, a few programs reach linear scaling, and very, very few are superlinear. It's generally safe to assume that a program is sublinear, but you should run some tests to determine how your program actually scales. The scalability of your program has a big effect on how many resources you should request, as shown in the example below.

For example, if a process takes 60 minutes on 1 processor, 30 minutes on 2 processors, and 15 minutes on 4 processors, we say that it scales linearly. Notice that in this example, doubling the number of processors cuts the running time in half.

If a piece of software's running time decreases more slowly than the number of processors increases, we call that sublinear. For example, consider a process that takes 60 minutes on 1 processor, 45 minutes on 2 processors, and 30 minutes on 4 processors. We're still doubling the number of processors each time, but the running time is cut by less than half. This process is sublinear.

If a piece of software's running time decreases faster than the number of processors increases, we call that superlinear. For example, a process that takes 60 minutes on 1 processor, 20 minutes on 2 processors, and 5 minutes on 4 processors is superlinear: each time the number of processors doubles, the running time is cut by more than half. This type of process is very rare.
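To check which category your own timings fall into, one common approach is to compute the parallel efficiency at each processor count: the 1-processor time divided by (processors × running time on that many processors). Values near 1 indicate linear scaling, values below 1 sublinear, and values above 1 superlinear. This is a minimal Python sketch that assumes you have measured a 1-processor baseline; the 5% tolerance for "near 1" is an arbitrary choice meant to absorb timing noise, and a result of `"mixed"` means the curve changes behavior across processor counts.

```python
def parallel_efficiency(t1: float, p: int, tp: float) -> float:
    """Speedup (t1 / tp) divided by the processor count p."""
    return t1 / (p * tp)


def classify(timings: dict[int, float], tol: float = 0.05) -> str:
    """Label timings {processors: minutes} as linear, sublinear, or superlinear.

    Assumes timings[1] (the single-processor run) is present.
    """
    t1 = timings[1]
    effs = [parallel_efficiency(t1, p, t) for p, t in timings.items() if p > 1]
    if all(abs(e - 1) <= tol for e in effs):
        return "linear"
    if all(e < 1 for e in effs):
        return "sublinear"
    if all(e > 1 for e in effs):
        return "superlinear"
    return "mixed"


# The three examples from the text:
print(classify({1: 60, 2: 30, 4: 15}))  # linear
print(classify({1: 60, 2: 45, 4: 30}))  # sublinear
print(classify({1: 60, 2: 20, 4: 5}))   # superlinear
```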

Scalability Limits

You should also be aware that many programs speed up smoothly up to a certain point, after which they either stop speeding up or actually begin to slow down. Where this limit falls depends on the software. Once you find this limit, you should not request any more resources per job than that. Even if the program doesn't slow down past the limit and simply runs in the same amount of time, you're still using more resources than you need to accomplish the same work.
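Once you have timings at several processor counts, finding this limit can be automated. The Python sketch below walks the processor counts in increasing order and returns the last count that still reduced the running time, stopping at the first step that doesn't. The `min_gain` parameter is a hypothetical knob for how large a fractional improvement you require per step before extra processors are worth requesting. The timings in the usage line are made up for illustration.

```python
def scaling_limit(timings: dict[int, float], min_gain: float = 0.0) -> int:
    """Return the processor count beyond which adding processors stops helping.

    timings maps processor count -> running time. A step "helps" only if the
    running time drops by more than the fraction min_gain.
    """
    counts = sorted(timings)
    best = counts[0]
    for prev, nxt in zip(counts, counts[1:]):
        if timings[nxt] < timings[prev] * (1 - min_gain):
            best = nxt   # still speeding up, keep going
        else:
            break        # plateau or slowdown: the limit is the previous count
    return best


# Hypothetical timings that flatten out at 8 processors and slow down at 16:
print(scaling_limit({1: 60, 2: 35, 4: 22, 8: 22, 16: 25}))  # 4
```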

What's more important: Speeding up individual cases, or getting the most cases through?

You also need to consider your primary purpose in using the Office of Research Computing. Some users simply want to reduce the time it takes to complete individual cases. These users generally have relatively few cases to process, and each one takes days or sometimes weeks. For them, any speedup is good, even if it is disproportionate to the resources consumed, so they will likely request the highest number of processors and other resources that still speeds up an individual case.

Many other users (the majority, we think) have a problem that can easily be split into many individual cases, sometimes tens of thousands of them. Rather than speeding up any one case, they want to get the most cases processed in the shortest amount of time. For these users, understanding scalability is very important, and they should find the point at which maximum efficiency is achieved. For this, use the metric of processor-time per case: multiply the number of processors by the running time per case, and minimize this value.
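The processor-time metric is simple to compute. This Python sketch multiplies processors by running time for each measured processor count and returns the count with the smallest product; the function names are illustrative, not part of any ORC tool.

```python
def processor_minutes(processors: int, minutes: float) -> float:
    """Processor-time consumed by one case: processors x running time."""
    return processors * minutes


def most_efficient(timings: dict[int, float]) -> int:
    """Return the processor count with the lowest processor-time per case."""
    # min() returns the first minimal key, so ties go to the earliest listed count.
    return min(timings, key=lambda p: processor_minutes(p, timings[p]))


print(most_efficient({1: 60, 2: 45, 4: 30}))  # 1  (sublinear example from the text)
print(most_efficient({1: 60, 2: 20, 4: 5}))   # 4  (superlinear example from the text)
```

Note that for the superlinear example, adding processors actually lowers processor-time per case, so requesting more of them is also the most efficient choice.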

Scalability Example

Here is an example of the very common sublinear scalability. Assume that you have access to 4 processors in total and that you have 8 cases to process. Assume also that you're most concerned about getting all the cases through as fast as possible, not about speeding up each individual case. Finally, assume that each of your cases takes the following amount of time to run:

| Processors per case | Running time (minutes) |
|---|---|
| 1 | 60 |
| 2 | 45 |
| 4 | 30 |

Since you're concerned about the total amount of running time for all the cases as a whole, you look at the metric of processor-time per case:

| Processors per case | Running time (minutes) | Processor-minutes consumed per case |
|---|---|---|
| 1 | 60 | 60 |
| 2 | 45 | 90 |
| 4 | 30 | 120 |

As you can see, even though it has the longest running time per case, using 1 processor per case is actually the most efficient.

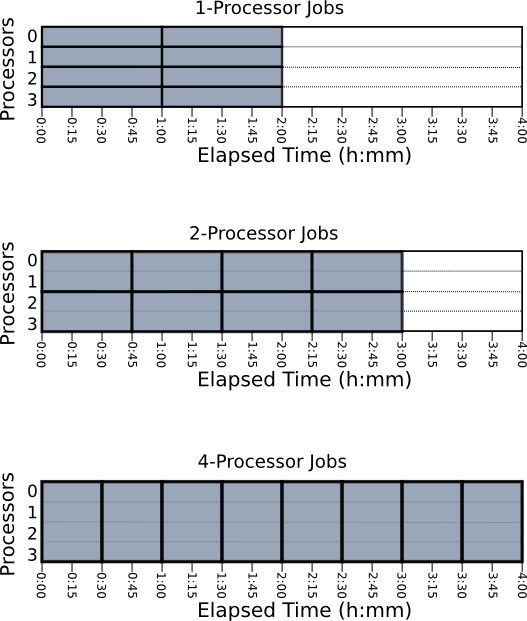

If you'd rather see this visually, look at the following diagrams that show what would happen if you ran these 8 cases with 1, 2, or 4 processors each:

As these diagrams show, even though using 1 processor takes the longest per case, the total time to get all 8 cases processed is the shortest when using 1 processor each.
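The diagrams can also be reproduced with a little arithmetic. The Python sketch below assumes every case takes exactly the same time, that cases run in full "waves" that start together, and that there is no scheduling overhead; under those assumptions it computes the wall-clock time to finish all 8 cases on 4 processors.

```python
import math


def total_time(total_processors: int, num_cases: int,
               procs_per_case: int, minutes_per_case: float) -> float:
    """Wall-clock minutes to finish every case, assuming identical cases run in waves."""
    concurrent = total_processors // procs_per_case  # cases that fit side by side
    if concurrent == 0:
        raise ValueError("one case needs more processors than are available")
    waves = math.ceil(num_cases / concurrent)        # rounds needed to cover all cases
    return waves * minutes_per_case


timings = {1: 60, 2: 45, 4: 30}  # running times from the table above
for p, minutes in timings.items():
    print(f"{p} processor(s) per case: {total_time(4, 8, p, minutes)} minutes")
# 1 processor(s) per case: 120 minutes
# 2 processor(s) per case: 180 minutes
# 4 processor(s) per case: 240 minutes
```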

Last changed on Fri Jul 8 12:09:38 2022